So you're getting tons of discarded packets on your Windows VM hosted on VMware? I have run across this in high-load situations, such as domain controllers with LOTS of traffic, and I have found a few tricks that help address it.

Determining the current state of things: For me, this has been a “one size does NOT fit all” kind of fix, so you may have to feel out your individual situation and do what makes the most sense.

How many discarded packets are you seeing and how fast are they happening?

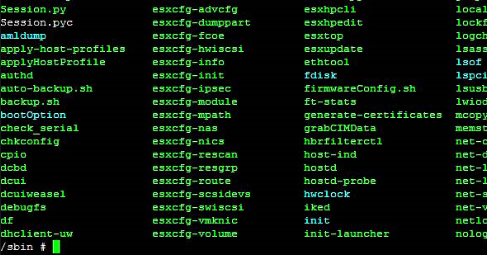

There are a few ways to check this from the guest, but I would recommend SSH’ing to the VM host and checking from there. To do this:

1. Open PuTTY and SSH to the host.

2. Find the vSwitch name and the VM's port number. To do this, maximize the PuTTY session window and type “net-stats -l” without the quotes. You will be shown a list of all the VMs on that host. Find the one with issues and take note of its “PortNum” and “SwitchName”.

3. Type “vsish -e get /net/portsets/vSwitchNameHere/ports/PortNumberHere/vmxnet3/rxSummary” and hit enter.

4. Check the following counters:

A: # of times the 1st/2nd ring is full

B: running out of buffers
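Steps 2 through 4 can be wrapped in a few shell helpers so the check is easy to repeat. This is a rough sketch, not a tested tool: the function names are made up for illustration, and it assumes that `net-stats -l` prints the port number in the first column and the switch name in the fourth, and that the rxSummary output has one `label:value` counter per line. Check both against your own host before trusting it.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, and the column layout of
# `net-stats -l` (PortNum first, SwitchName fourth) is an assumption.

# Read `net-stats -l` output on stdin; print "PortNum SwitchName" for
# every row that mentions the given VM name.
# $1 = VM name (or any unique part of it)
find_vm_port() {
    awk -v vm="$1" 'index($0, vm) { print $1, $4 }'
}

# Build the vsish node path for a port's vmxnet3 receive summary.
# $1 = vSwitch name, $2 = port number
rx_summary_path() {
    printf '/net/portsets/%s/ports/%s/vmxnet3/rxSummary\n' "$1" "$2"
}

# Read rxSummary output on stdin; keep only the ring-full and
# out-of-buffers counters.
filter_rx_counters() {
    grep -E 'ring is full|running out of buffers'
}

# Example, run on the host (VM name, switch, and port are placeholders):
#   net-stats -l | find_vm_port MyVM
#   vsish -e get "$(rx_summary_path vSwitch0 33554464)" | filter_rx_counters
```

The helpers only read from stdin or build a string, so nothing touches the host until you pipe the real commands into them.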

If your “running out of buffers” count is high, the receive buffers in the guest OS are too small. Check “Rx Ring #1 Size” and “Rx Ring #2 Size” in the adapter properties in the OS. Typically these are set to “Not Present” in the OS; bump them up to a value that suits your environment. I set ours to 2048 and this made a huge difference.
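To answer the “how fast” question, it helps to read a counter twice and divide the difference by the time between reads. A minimal sketch, assuming the rxSummary output has one `label:value` pair per line; `counter_value` and `per_second` are names invented here, not part of any VMware tool.

```shell
#!/bin/sh
# Sketch only: assumes rxSummary prints one "label:value" pair per line.

# Read rxSummary output on stdin; print the number on the first line
# that contains the given label.
# $1 = counter label, e.g. 'running out of buffers'
counter_value() {
    awk -F: -v label="$1" 'index($0, label) { gsub(/[^0-9]/, "", $NF); print $NF; exit }'
}

# Integer growth per second between two readings of the same counter.
# $1 = earlier value, $2 = later value, $3 = seconds between readings
per_second() {
    echo $(( ($2 - $1) / $3 ))
}

# Example on the host (NODE is the rxSummary path from step 3):
#   a=$(vsish -e get "$NODE" | counter_value 'running out of buffers')
#   sleep 10
#   b=$(vsish -e get "$NODE" | counter_value 'running out of buffers')
#   per_second "$a" "$b" 10
```

Running the same measurement before and after changing the ring sizes gives you a like-for-like comparison instead of eyeballing a cumulative total.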

TOE/Off-loading/RSS

While you're in the NIC settings, make sure that:

A. RSS is enabled. (This allows your VM to use more than one CPU to handle requests. This is huge and resolves most of the problem if it needs to be turned on.)

B. All offloading is disabled. (This isn't as much of a problem on the E1000 interfaces, but on VMXNET3 it just ends up wasting compute cycles.)

A note about RSS: Windows acts a little strange when it allocates the RX queues. Our VM had 6 vCPUs. With RSS disabled we had 1 queue. With RSS enabled we had 4. We added 2 more vCPUs and we now have 8 queues, which suggests the queue count gets rounded down to a power of two. From a network stack perspective, the number of queues greatly impacts the system's ability to deal with inbound connection attempts.

To check the number of queues your VM is using via RSS, SSH into the host and type:

“vsish -e ls /net/portsets/vSwitchNameHere/ports/PortNumberHere/vmxnet3/rxqueues/”

(Obviously, no quotes)
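If you just want the number, counting the entries in that listing is enough. A small sketch, assuming `vsish -e ls` prints one queue per line as a number followed by a slash (for example `0/`); `count_rx_queues` is a made-up helper name.

```shell
#!/bin/sh
# Sketch only: assumes one queue entry per line, such as "0/" or "1/".

# Read the rxqueues listing on stdin; print how many queue entries it has.
count_rx_queues() {
    grep -cE '^[0-9]+/?$'
}

# Example on the host (switch name and port number are placeholders):
#   vsish -e ls /net/portsets/vSwitch0/ports/33554464/vmxnet3/rxqueues/ | count_rx_queues
```

Checking this before and after toggling RSS or adding vCPUs is a quick way to confirm the guest actually picked up the change.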

Slapped this together fast, so let me know if you guys find anything else that should be added.